Optimized Hot and Outlier Spot Analysis, Similarity Search Tools

Optimized Hot Spot Analysis

How do you use the Optimized Hot Spot Analysis tool in ArcToolbox?


Path to access the tool: Optimized Hot Spot Analysis Tool, Mapping Clusters Toolset, Spatial Statistics Tools Toolbox

Optimized Hot Spot Analysis

Given incident points or weighted features (points or polygons), creates a map of statistically significant hot and cold spots using the Getis-Ord Gi* statistic. It evaluates the characteristics of the input feature class to produce optimal results.

1. Input Features

The point or polygon feature class for which hot spot analysis will be performed.

2. Output Features

The output feature class to receive the z-score, p-value, and Gi_Bin results.

3. Analysis Field (optional)

The numeric field (number of incidents, crime rates, test scores, and so on) to be evaluated.

4. Incident Data Aggregation Method (optional)

The aggregation method to use to create weighted features for analysis from incident point data.

- COUNT_INCIDENTS_WITHIN_FISHNET_POLYGONS—A fishnet polygon mesh will overlay the incident point data and the number of incidents within each polygon cell will be counted. If no bounding polygon is provided in the Bounding Polygons Defining Where Incidents Are Possible parameter, only cells with at least one incident will be used in the analysis; otherwise, all cells within the bounding polygons will be analyzed.

- COUNT_INCIDENTS_WITHIN_HEXAGON_POLYGONS—A hexagon polygon mesh will overlay the incident point data and the number of incidents within each polygon cell will be counted. If no bounding polygon is provided in the Bounding Polygons Defining Where Incidents Are Possible parameter, only cells with at least one incident will be used in the analysis; otherwise, all cells within the bounding polygons will be analyzed.

- COUNT_INCIDENTS_WITHIN_AGGREGATION_POLYGONS—You provide aggregation polygons to overlay the incident point data in the Polygons For Aggregating Incidents Into Counts parameter. The incidents within each polygon are counted.

- SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS—Nearby incidents will be aggregated together to create a single weighted point. The weight for each point is the number of aggregated incidents at that location.
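To make the fishnet option more concrete, here is a minimal pure-Python sketch of counting incidents per square cell. It does not call ArcGIS, and the tool's own implementation may differ; the function name `count_incidents_in_fishnet`, the grid origin at (0, 0), and the sample coordinates are assumptions made for illustration. As with the tool when no bounding polygons are supplied, only cells that hold at least one incident appear in the result.

```python
from collections import Counter
from math import floor


def count_incidents_in_fishnet(points, cell_size, origin=(0.0, 0.0)):
    """Count incident points per square fishnet cell.

    Returns a dict mapping (column, row) cell indices to incident counts.
    Cells without incidents are simply absent from the result.
    """
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    origin_x, origin_y = origin
    counts = Counter()
    for x, y in points:
        # Integer cell index along each axis, measured from the grid origin.
        column = floor((x - origin_x) / cell_size)
        row = floor((y - origin_y) / cell_size)
        counts[(column, row)] += 1
    return dict(counts)


# Hypothetical incident coordinates in map units (for example, meters).
incidents = [(10, 10), (20, 30), (120, 40), (130, 45), (150, 160)]
cell_counts = count_incidents_in_fishnet(incidents, cell_size=100)
print(cell_counts)
```

The counts per cell play the role of the weights that the hot spot statistic is then computed on.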

5. Bounding Polygons Defining Where Incidents Are Possible (optional)

A polygon feature class defining where the incident Input Features could possibly occur.

6. Polygons For Aggregating Incidents Into Counts (optional)

The polygons to use to aggregate the incident Input Features in order to get an incident count for each polygon feature.

7. Density Surface (optional)

The Density Surface parameter is disabled; it remains as a tool parameter only to support backwards compatibility. The Kernel Density tool can be used if you would like a density surface visualization of your weighted points.

8. Cell Size (optional)

The size of the grid cells used to aggregate the Input Features. When aggregating into a hexagon grid, this distance is used as the height to construct the hexagon polygons.

9. Distance Band (optional)

The spatial extent of the analysis neighborhood. This value determines which features are analyzed together in order to assess local clustering.

This tool only supports kilometers, meters, miles and feet.
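To show how the distance band shapes the result, the following is a minimal sketch of the Getis-Ord Gi* z-score with a fixed distance band, written in plain Python rather than with the ArcGIS tool. It assumes binary weights (1 for every feature within the band, including the feature itself, and 0 otherwise); the function name `gi_star_z_scores` and the sample data are hypothetical, and the tool itself also chooses the band and applies corrections that are not reproduced here.

```python
from math import hypot, sqrt


def gi_star_z_scores(points, values, distance_band):
    """Return one Gi* z-score per feature using a fixed distance band."""
    n = len(points)
    mean = sum(values) / n
    variance = sum(v * v for v in values) / n - mean * mean
    if variance <= 0:
        raise ValueError("values must not all be identical")
    std_dev = sqrt(variance)

    scores = []
    for xi, yi in points:
        # Binary weights: 1 for features inside the band (self included).
        weights = [
            1.0 if hypot(xi - xj, yi - yj) <= distance_band else 0.0
            for xj, yj in points
        ]
        weight_sum = sum(weights)
        weight_sq_sum = sum(w * w for w in weights)
        numerator = sum(w * v for w, v in zip(weights, values)) - mean * weight_sum
        denominator = std_dev * sqrt((n * weight_sq_sum - weight_sum ** 2) / (n - 1))
        # If every feature is a neighbor, the denominator is zero; report 0.
        scores.append(numerator / denominator if denominator else 0.0)
    return scores


# Hypothetical weighted points: high counts on the left, low counts on the right.
locations = [(0, 0), (1, 0), (2, 0), (10, 0), (11, 0), (12, 0)]
counts = [9, 10, 8, 1, 2, 1]
z_scores = gi_star_z_scores(locations, counts, distance_band=1.5)
for location, z in zip(locations, z_scores):
    print(location, round(z, 2))
```

In this sample the middle feature of the left group gets a large positive z-score (a hot spot) and the middle feature of the right group gets a large negative one (a cold spot). Widening the band until every feature neighbors every other removes the local contrast entirely.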

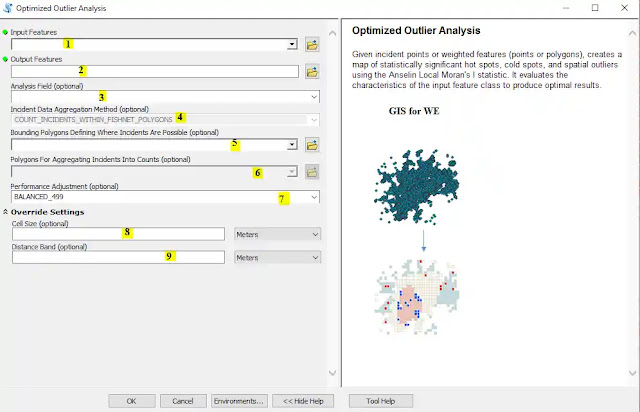

Optimized Outlier Analysis

How do you use the Optimized Outlier Analysis tool in ArcToolbox?


Path to access the tool: Optimized Outlier Analysis Tool, Mapping Clusters Toolset, Spatial Statistics Tools Toolbox

Optimized Outlier Analysis

Given incident points or weighted features (points or polygons), creates a map of statistically significant hot spots, cold spots, and spatial outliers using the Anselin Local Moran's I statistic. It evaluates the characteristics of the input feature class to produce optimal results.

1. Input Features

The point or polygon feature class for which the cluster and outlier analysis will be performed.

2. Output Features

The output feature class to receive the result fields.

3. Analysis Field (optional)

The numeric field (number of incidents, crime rates, test scores, and so on) to be evaluated.

4. Incident Data Aggregation Method (optional)

The aggregation method to use to create weighted features for analysis from incident point data.

- COUNT_INCIDENTS_WITHIN_FISHNET_POLYGONS—A fishnet polygon mesh will overlay the incident point data and the number of incidents within each polygon cell will be counted. If no bounding polygon is provided in the Bounding Polygons Defining Where Incidents Are Possible parameter, only cells with at least one incident will be used in the analysis; otherwise, all cells within the bounding polygons will be analyzed.

- COUNT_INCIDENTS_WITHIN_HEXAGON_POLYGONS—A hexagon polygon mesh will overlay the incident point data and the number of incidents within each polygon cell will be counted. If no bounding polygon is provided in the Bounding Polygons Defining Where Incidents Are Possible parameter, only cells with at least one incident will be used in the analysis; otherwise, all cells within the bounding polygons will be analyzed.

- COUNT_INCIDENTS_WITHIN_AGGREGATION_POLYGONS—You provide aggregation polygons to overlay the incident point data in the Polygons For Aggregating Incidents Into Counts parameter. The incidents within each polygon are counted.

- SNAP_NEARBY_INCIDENTS_TO_CREATE_WEIGHTED_POINTS—Nearby incidents will be aggregated together to create a single weighted point. The weight for each point is the number of aggregated incidents at that location.

5. Bounding Polygons Defining Where Incidents Are Possible (optional)

A polygon feature class defining where the incident Input Features could possibly occur.

6. Polygons For Aggregating Incidents Into Counts (optional)

The polygons to use to aggregate the incident Input Features in order to get an incident count for each polygon feature.

7. Performance Adjustment (optional)

This analysis utilizes permutations to create a reference distribution. Choosing the number of permutations is a balance between precision and increased processing time. Choose your preference for speed versus precision. More robust and precise results take longer to calculate.

- QUICK_199—With 199 permutations, the smallest possible pseudo p-value is 0.005 and all other pseudo p-values will be even multiples of this value.

- BALANCED_499—With 499 permutations, the smallest possible pseudo p-value is 0.002 and all other pseudo p-values will be even multiples of this value.

- ROBUST_999—With 999 permutations, the smallest possible pseudo p-value is 0.001 and all other pseudo p-values will be even multiples of this value.
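The smallest pseudo p-values listed above follow from dividing 1 by the number of permutations plus 1. The sketch below checks that arithmetic and also shows one common one-sided convention for turning a set of permuted statistics into a pseudo p-value. That convention, and the names `smallest_pseudo_p_value` and `pseudo_p_value`, are assumptions for illustration; the tool's exact computation is not documented here.

```python
def smallest_pseudo_p_value(permutations):
    """Smallest pseudo p-value attainable with this many permutations."""
    return 1.0 / (permutations + 1)


def pseudo_p_value(observed, permuted_statistics):
    """One-sided pseudo p-value: share of statistics at least as large as
    the observed one, counting the observed value itself (assumed convention)."""
    at_least_as_large = sum(1 for value in permuted_statistics if value >= observed)
    return (at_least_as_large + 1) / (len(permuted_statistics) + 1)


for label, permutations in [("QUICK_199", 199), ("BALANCED_499", 499), ("ROBUST_999", 999)]:
    print(label, smallest_pseudo_p_value(permutations))

# Hypothetical reference distribution of 199 permuted statistics.
reference = [i / 100 for i in range(199)]
p_extreme = pseudo_p_value(observed=5.0, permuted_statistics=reference)
p_typical = pseudo_p_value(observed=1.0, permuted_statistics=reference)
```

Because the denominator is fixed by the permutation count, every pseudo p-value is a multiple of the smallest one, which is why more permutations give finer resolution at the cost of longer run times.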

8. Cell Size (optional)

The size of the grid cells used to aggregate the Input Features. When aggregating into a hexagon grid, this distance is used as the height to construct the hexagon polygons.

This tool only supports kilometers, meters, miles and feet.

9. Distance Band (optional)

The spatial extent of the analysis neighborhood. This value determines which features are analyzed together in order to assess local clustering.

This tool only supports kilometers, meters, miles and feet.

Similarity Search

How do you use the Similarity Search tool in ArcToolbox?


Path to access the tool: Similarity Search Tool, Mapping Clusters Toolset, Spatial Statistics Tools Toolbox

Similarity Search

Identifies which candidate features are most similar or most dissimilar to one or more input features based on feature attributes.

1. Input Features To Match

The layer (or a selection on a layer) containing the features you want to match; you are searching for other features that look like these features. When more than one feature is provided, matching is based on attribute averages.

Tip: When your Input Features To Match and Candidate Features come from a single dataset:

- Select the reference features you want to match.

- Right-click the layer and choose Selection followed by Create Layer From Selected Features. Use the new layer created for this parameter.

- Right-click the layer again and choose Selection followed by Switch Selection to get the layer you will use for your Candidate Features.

2. Candidate Features

The layer (or a selection on a layer) containing candidate matching features. The tool will look for features most like (or most unlike) the Input Features To Match among these candidates.

Tip: When your Input Features To Match and Candidate Features come from a single dataset:

- Select the reference features you want to match.

- Right-click the layer and choose Selection followed by Create Layer From Selected Features. Use the new layer created for the Input Features To Match parameter.

- Right-click the layer again and choose Selection followed by Switch Selection to get the layer you will use for this parameter.

3. Output Features

The output feature class contains a record for each of the Input Features To Match and for all the solution-matching features found.

4. Collapse Output To Points

When the Input Features To Match and the Candidate Features are both either lines or polygons, you may choose whether you want the geometry for the Output Features to be collapsed to points or to match the original geometry (lines or polygons) of the input features. The option to match the original geometry is only available with a Desktop Advanced license. Checking this option for large line or polygon datasets will improve tool performance.

- Checked—The line and polygon features will be represented as feature centroids (points).

- Unchecked—The output geometry will match the line or polygon geometry of the input features. This is the default.

5. Most Or Least Similar

Choose whether you are interested in features that are most alike or most different to the Input Features To Match.

- MOST_SIMILAR—Find the features that are most alike.

- LEAST_SIMILAR—Find the features that are most different.

- BOTH—Find both the features that are most alike and the features that are most different.

6. Match Method

Choose whether matching should be based on values, ranks, or cosine relationships.

- ATTRIBUTE_VALUES—Similarity or dissimilarity will be based on the sum of squared standardized attribute value differences for all the Attributes Of Interest.

- RANKED_ATTRIBUTE_VALUES—Similarity or dissimilarity will be based on the sum of squared rank differences for all the Attributes Of Interest.

- ATTRIBUTE_PROFILES—Similarity or dissimilarity will be computed as a function of cosine similarity for all the Attributes Of Interest.
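The three match methods can be compared with a small pure-Python sketch. It is an illustration only, not the tool's implementation: standardization uses z-scores over all rows, ranks ignore ties, cosine similarity is taken on the raw attribute vectors, and all function names and sample values are hypothetical.

```python
from math import sqrt


def standardize(rows):
    """Z-score each attribute column across all rows."""
    columns = list(zip(*rows))
    means = [sum(c) / len(c) for c in columns]
    # Fall back to 1.0 when a column is constant to avoid dividing by zero.
    stds = [
        sqrt(sum((v - m) ** 2 for v in c) / len(c)) or 1.0
        for c, m in zip(columns, means)
    ]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def rank_columns(rows):
    """Replace each value with its 1-based rank within its column (ties not averaged)."""
    ranked = []
    for column in zip(*rows):
        order = sorted(range(len(column)), key=lambda i: column[i])
        ranks = [0] * len(column)
        for rank, index in enumerate(order, start=1):
            ranks[index] = rank
        ranked.append(ranks)
    return [list(row) for row in zip(*ranked)]


def sum_squared_differences(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Row 0 is the feature to match; the remaining rows are candidates A, B, C.
features = [
    [100.0, 10.0],  # feature to match
    [110.0, 11.0],  # candidate A: similar values
    [300.0, 2.0],   # candidate B: different values and proportions
    [10.0, 1.0],    # candidate C: much smaller, same proportions
]
z = standardize(features)
ranks = rank_columns(features)

# Lower is more similar for the two difference-based methods.
value_scores = [sum_squared_differences(z[0], row) for row in z[1:]]
rank_scores = [sum_squared_differences(ranks[0], row) for row in ranks[1:]]
# Higher is more similar for cosine similarity.
profile_scores = [cosine_similarity(features[0], row) for row in features[1:]]

print(value_scores, rank_scores, profile_scores)
```

Candidate C is a poor match by values and ranks but a perfect match by profile, because cosine similarity compares the proportions between attributes rather than their magnitudes.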

7. Number Of Results

The number of solution matches to find. Entering zero or a number larger than the total number of Candidate Features will return rankings for all the candidate features.

8. Attributes Of Interest

A list of numeric attributes representing the matching criteria.

9. Fields To Append To Output (optional)

An optional list of attributes to include with the Output Features. You might want to include a name identifier, categorical field, or date field, for example. These fields are not used to determine similarity; they are only included in the Output Features for your reference.
